TikTok is implementing additional generative AI transparency measures, including new labels on content that’s been created or modified with external AI tools, and new media literacy resources to help users better understand how generative AI is being used, and misused, on the web.

First off, its new AI labels. TikTok is working with the Coalition for Content Provenance and Authenticity (C2PA) to establish a new process for identifying AI-generated content that’s been uploaded from other sources, which will now be labeled as such in-stream.

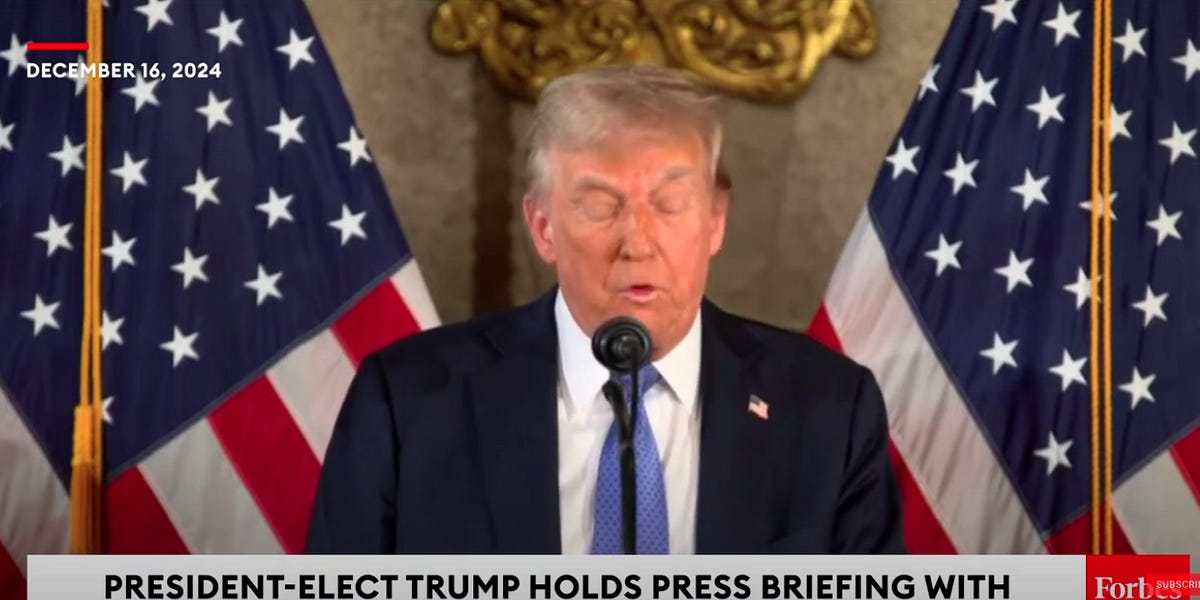

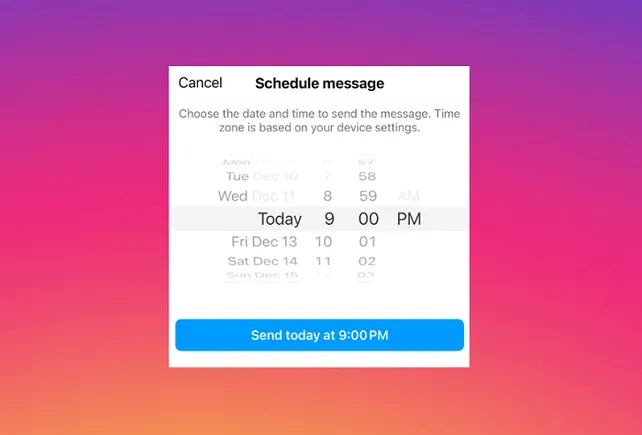

As you can see in this example sequence, videos uploaded to TikTok will now be scanned for C2PA metadata markers, and will be labeled as AI-generated in-stream when those markers are detected.

As explained by TikTok:

“[The C2PA project] attaches metadata to content, which we can use to instantly recognize and label AI-generated [content]. This capability started rolling out today on images and videos, and will be coming to audio-only content soon. Over the coming months, we’ll also start attaching [C2PA labels] to TikTok content, which will remain on content when downloaded. That means that anyone will be able to use C2PA’s Verify tool to help identify AIGC that was made on TikTok and even learn when, where and how the content was made or edited.”

TikTok has already implemented compulsory labels for content created with its own in-stream generative AI tools, in order to provide direct transparency on those clips.

The new measures will give users even more insight into the use of other AI tools, which should help to limit misinformation and misunderstanding around AI-generated content.

Of course, it’s not foolproof. Various tech platforms are participating in the C2PA project, including Google, OpenAI, Microsoft and Adobe, but not all generative AI providers have signed up, so content made with their tools won’t necessarily include the required metadata markers.

But it’s another step towards greater AI transparency, and given the rising tide of AI images infiltrating the web, it’s important that every effort is made to increase transparency, and awareness of AI-generated fakes.

TikTok says that it’s the first video-sharing platform to put these measures into practice.

In addition, TikTok is also working with MediaWise to develop new generative AI media literacy campaigns, in order to help users understand and identify AI content.

“We’ll release 12 videos throughout the year that highlight universal media literacy skills while explaining how TikTok tools like AIGC labels can further contextualize content. We’ll also be launching a campaign to raise awareness around AI labeling and potentially misleading AIGC, with a series of videos.”

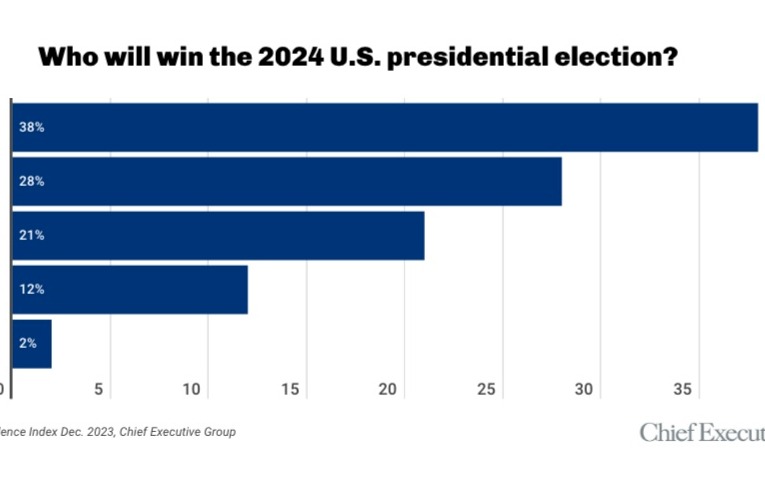

Again, given the increasing stream of AI content flowing across the web, it’s important that every step is taken to improve awareness of fakes and highlight their potential misuse, in order to avoid confusion. That’s especially important heading into elections, with AI fakes likely to be used to stoke fears and sway voters through misleading, but convincing, AI generations.

As such, this is an important step, and it’s good to see TikTok taking additional measures to improve AI literacy.