NurPhoto via Getty Images

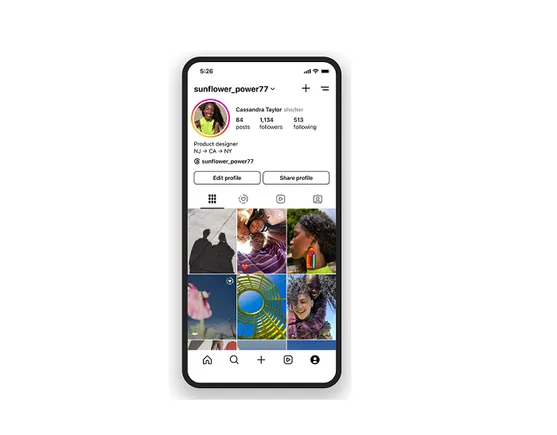

New research shows that young Instagram users can still quickly find drug-related content despite safety concerns and increased political pressure. A report from the Tech Transparency Project, an independent tech watchdog, found that some accounts are actually selling illegal substances such as MDMA.

Instagram has made efforts to curb drug-related hashtags, which remain a core element of the app’s architecture. But the group’s research found that drug content could be found simply by dropping the hashtag from searches. Searching for “mdma for sale,” rather than “#mdma,” turned up multiple accounts peddling the drug, the researchers say. The same approach worked for “oxy,” slang for the opioid OxyContin, and for “Xanax,” the anti-anxiety medication.

Tech Transparency Project’s latest research follows an earlier study the group published in December, which detailed how teenagers can find drug content and sometimes buy drugs via Instagram. Officially, of course, selling drugs isn’t allowed on Instagram, and Instagram chief Adam Mosseri reiterated that policy during congressional testimony in December. Instagram has removed drug-related hashtags and added warning prompts to drug-related searches; these prompts link to other websites. Those efforts aren’t enough, says Katie Paul, the Tech Transparency Project’s director. “Instagram is opposed to actually doing something that will materially address these harms on its platform because they don’t want to cut into their bottom line,” she says, arguing that greater content controls could reduce the amount of time users spend on Instagram.

The new Tech Transparency Project research highlights the thorny nature of Instagram’s dilemma. The company has taken some measures to clean up its app and better protect young users, but the platform remains susceptible to misuse and rule-breaking. With roughly 1 billion monthly users to police, Instagram moves to quash one problem only for another (or several) to arise somewhere else. The app has drawn particular fire from lawmakers over the past year after the Facebook Papers leak revealed internal research into the app’s effects on young people’s mental health. According to that research, the app did have negative effects on teens. Instagram pushed back on the research, saying it was based on small samples.

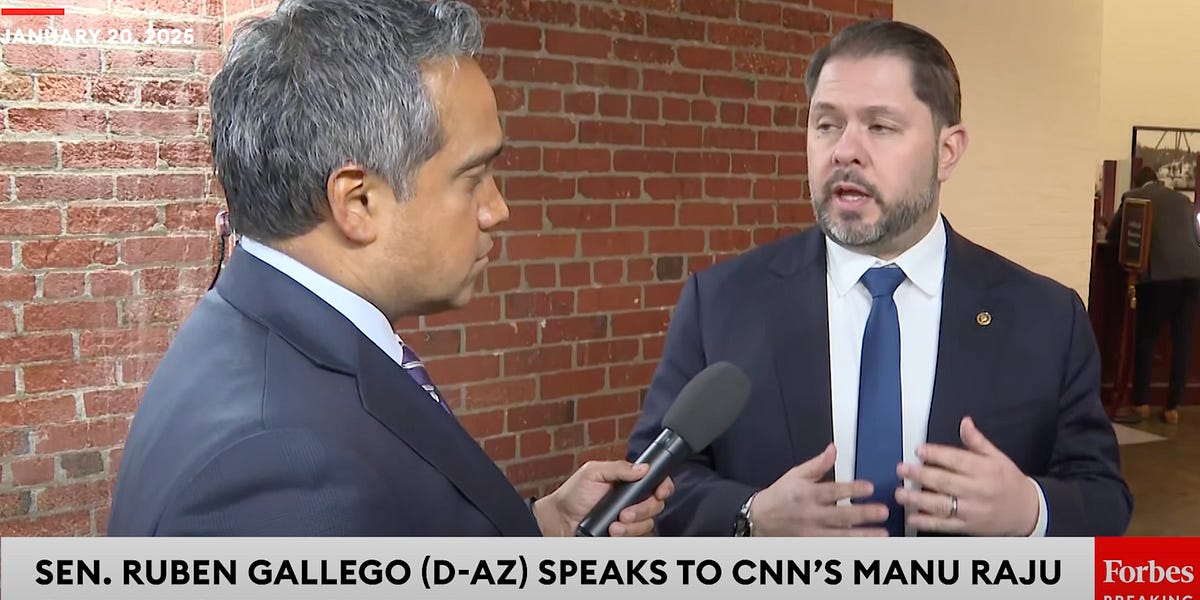

To study how teens encounter drugs on Instagram, the Tech Transparency Project created a series of dummy accounts registered as teenage users and used them to test the app’s protections for teens. Congressional staff conducted similar research, and lawmakers used the results to criticize Instagram and Meta when Meta executives appeared on Capitol Hill.

The new Tech Transparency Project research also found holes in Instagram’s hashtag policies. For instance, #fetanyl was blocked, but #fetanylcalifornia wasn’t, and searching “#fetanylcalifornia” produced accounts the researchers say sold the opioid. While #Xanax couldn’t be found on a desktop search, it could still be searched via mobile. In another example, “#opiates” returned no search results, but Instagram then suggested #opiatesforsale.

Here’s another place where Instagram’s algorithm worked against the app’s ostensible safety practices. When a dummy Tech Transparency Project account followed @silkroadpharma.cy, which the researchers say sold Adderall and the hallucinogen PCP, Instagram recommended other drug-related accounts, including @calipills_415. According to the research, the latter advertised “discrete shipping” across America.

In another instance, a dummy Tech Transparency Project account followed a purported drug-dealing account, @despasitro, and was prompted to follow another, @xanaxsubutexoxycodone. That account’s profile picture showed a white heart placed next to small plastic bags.