Who should decide what can and cannot be shared on social platforms? Mark Zuckerberg? Elon Musk? The users themselves?

Well, according to a new study conducted by Pew Research, a slim majority of Americans believe that social platforms should be regulated by the government.

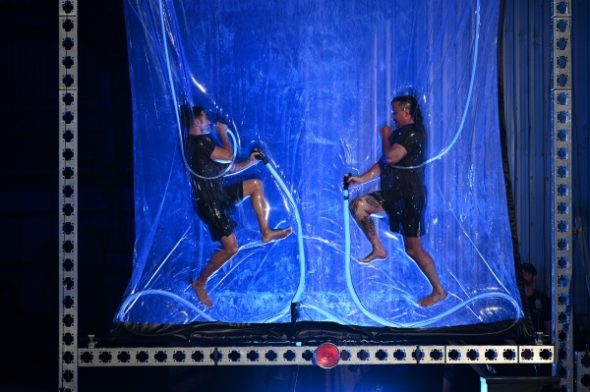

As you can see in this chart, 51% of Americans believe that social platforms should be regulated by an official, government-appointed body, down from 56% in 2021.

That survey came just after the 2020 election and the Capitol riots, and in that environment, it makes sense that there was increased concern about the impact of social platforms on political speech.

But now, as we head into another U.S. election, those concerns seem to have eased, though most people still think that the government should do more to police content in social apps.

The question of social media regulation is complex. It's not ideal to let the executives of mass communication platforms dictate what can and cannot be shared in each app, but regional variances and local rules also make a universal approach to content difficult.

For years, Meta has argued that there should be more government regulation of social media, which would take the pressure off its own team in weighing critical questions around speech. The COVID pandemic heightened debate on this issue, while the use of social media by political candidates, and the suppression of opposing voices in certain regions, also leave platform decision-makers in a tough position over how they should act.

Really, Meta, X and all platforms would prefer not to police user speech at all, but advertiser expectations, and government rules, mean that they are compelled to act in some cases.

But really, the decision shouldn’t come down to a few suits in a boardroom, debating the merits of certain speech.

That's what Meta has sought to address with its Oversight Board project, in which a team of experts reviews Meta’s moderation decisions in response to challenges from users.

The problem is that the group, while operating independently, is still funded by Meta, which many will view as a source of bias. Then again, any government-funded moderation group would face the same accusation, depending on the government of the day. And while such a body would reduce the decision-making pressure on each individual platform, it wouldn’t lessen the scrutiny of these decisions.

Maybe, then, Elon Musk is on the right path by focusing on crowd-sourced moderation instead, with Community Notes now playing a much bigger role in X’s process. That enables users to decide what’s right and what’s not, what should be allowed and what should be disputed, and there is evidence to suggest that Community Notes has had a positive impact in reducing the spread of misinformation in the app.

Yet, at the same time, Community Notes currently can't scale to the level required to address all content concerns, and notes can’t be added fast enough to stop the amplification of certain claims before they’ve had an impact.

Maybe adding upvotes and downvotes to all posts would be another solution, which could speed up the same process and see the distribution of misinformation crushed quickly.

But that can also be gamed. And again, authoritarian governments are already very active in seeking to suppress speech that they don’t like. Empowering them to make such calls would not lead to an improved process.

That's why this is such a complex issue, and while there’s a clear desire from the public for more government oversight, you can also see why governments would be keen to keep their distance from it.

But they should take responsibility. If regulators are implementing rules that recognize the power and influence of social platforms, then they should also be looking to enact moderation standards along the same lines.

That would mean that all questions around what’s allowed and what’s not would be deferred to elected officials, and voters could express their support for, or opposition to, those decisions at the ballot box.

It’s not a perfect solution by any means, but it does seem better than the current variance in approaches.