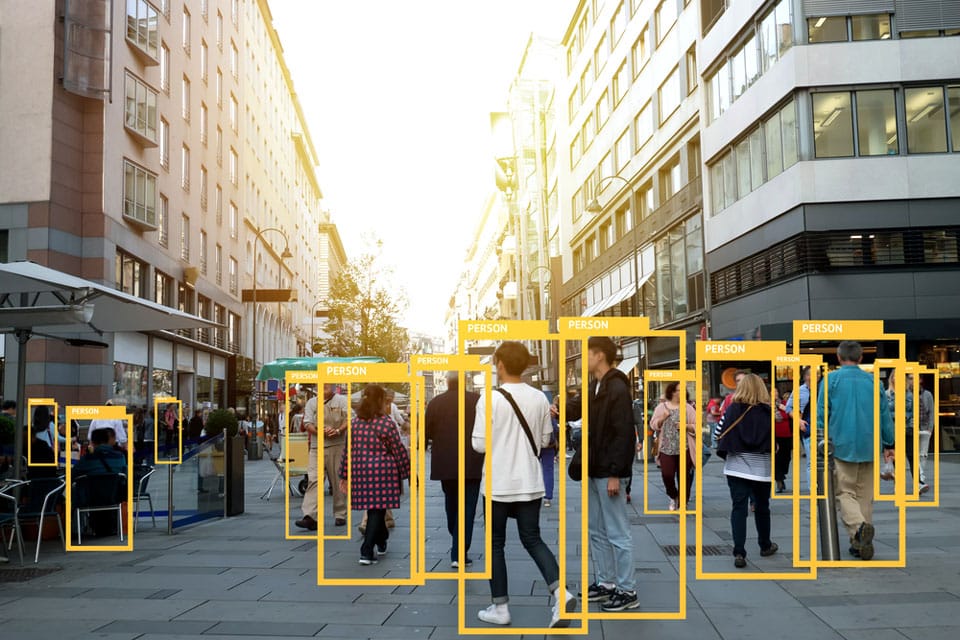

Natural Language Processing (NLP) is an area of Artificial Intelligence (AI) that enables machines to understand human language. NLP combines linguistics and computer science to study the rules and structure of language and to build intelligent systems that can interpret, analyse, and extract meaning from text and speech.

To understand the structure and meaning of human language, NLP analyses its many components, such as syntax, semantics, pragmatics, and morphology. Computer science then turns this linguistic information into rule-based or machine learning algorithms that can solve specific problems and perform tasks.

Take Gmail, for example. Emails are automatically categorised as promotions, social, primary, or spam, thanks to an NLP technique called keyword extraction. By “reading” phrases in subject lines and associating them with specific tags, the system determines which category each email belongs to.
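The keyword-matching idea can be illustrated with a toy rule-based tagger. This is a sketch, not how Gmail actually works: the keyword lists and the `categorise` function are hypothetical, and a production system would learn its signals from data rather than rely on hand-written lists.

```python
import re

# Hypothetical keyword lists per category; a real system would learn these from data.
CATEGORY_KEYWORDS = {
    "spam": {"winner", "lottery", "prize", "urgent"},
    "promotions": {"sale", "discount", "offer", "coupon"},
    "social": {"friend", "tagged", "followed", "mentioned"},
}

def categorise(subject: str) -> str:
    """Return the category whose keywords best match the subject line."""
    # Lower-case the subject line and split it into word tokens.
    tokens = set(re.findall(r"[a-z]+", subject.lower()))
    # Count keyword hits for each category.
    scores = {cat: len(tokens & words) for cat, words in CATEGORY_KEYWORDS.items()}
    best, hits = max(scores.items(), key=lambda item: item[1])
    # Fall back to "primary" when no keyword matches at all.
    return best if hits > 0 else "primary"

print(categorise("Huge SALE: 50% discount this weekend"))  # promotions
print(categorise("Alex tagged you in a photo"))            # social
print(categorise("Minutes from Tuesday's meeting"))        # primary
```

Ties go to the first category in the dictionary, which is a simplification a trained classifier would not need.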

NLP Benefits

NLP technology can analyse text at scale across documents, internal systems, emails, social media data, online reviews, and more. It can process massive amounts of data in seconds or minutes, whereas manual analysis would take days or weeks. NLP tools can also scale up or down quickly to meet your demands, so you have as much or as little computational capacity as you require.

- Get a more objective and precise assessment

Humans are prone to mistakes and inherent biases when performing repetitive tasks, such as reading and analysing open-ended survey responses and other text data.

NLP-powered tools can be trained on your company’s language and requirements, often in minutes. Once they’re up and running, they apply the same criteria consistently, so they can outperform humans on repetitive analysis. You can also update and retrain your natural language processing models as your business’s market or language changes.

- Streamline processes to save money

NLP tools can operate at any scale you require, 24 hours a day, seven days a week, in real time. Manual data analysis would need at least a couple of full-time staff, but with NLP SaaS solutions you can keep headcount to a minimum. When you connect NLP tools to your data, you can analyse customer feedback as it arrives and spot problems with your product or service immediately.

To streamline operations and spare your agents from monotonous chores, use NLP solutions like MonkeyLearn to automate ticket tagging and routing. The same tools can also help you spot new trends as they emerge.

- Boost client satisfaction

To ensure that no customer is left hanging, NLP tools let you automatically analyse and sort customer service issues by topic, intent, urgency, sentiment, and so on, and route them to the appropriate team or person.
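A minimal sketch of the tag-then-route flow, using keyword rules. The topics, keyword sets, team names and the `route_ticket` function are all hypothetical. Tools such as MonkeyLearn use trained classifiers rather than fixed keyword lists, so treat this only as an illustration of how tags drive routing.

```python
import re
from dataclasses import dataclass

# Hypothetical topic rules: keywords that signal a topic, and the team that owns it.
TOPIC_RULES = {
    "billing": ({"invoice", "refund", "charge", "payment"}, "Finance"),
    "technical": ({"error", "crash", "bug", "login"}, "Engineering"),
}
# Hypothetical words that mark a ticket as urgent.
URGENT_WORDS = {"urgent", "asap", "immediately", "outage"}

@dataclass
class Routing:
    topic: str
    team: str
    urgent: bool

def route_ticket(text: str) -> Routing:
    """Tag a support ticket by topic and urgency, then pick the owning team."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    # Default route when no topic keyword matches.
    topic, team, best_hits = "general", "Customer Support", 0
    for name, (keywords, owner) in TOPIC_RULES.items():
        hits = len(tokens & keywords)
        if hits > best_hits:
            topic, team, best_hits = name, owner, hits
    urgent = bool(tokens & URGENT_WORDS)
    return Routing(topic, team, urgent)

print(route_ticket("I was charged twice, please refund ASAP"))
# Routing(topic='billing', team='Finance', urgent=True)
```

Keeping tagging (topic, urgency) separate from the routing decision mirrors the text: the same tags could feed a priority queue or a reporting dashboard.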

MonkeyLearn’s integrations with CRM and help desk platforms such as Zendesk, Freshdesk, Service Cloud, and HelpScout make it possible to monitor, route, and even respond to customer support requests automatically. By running NLP analysis on customer satisfaction surveys, you can also quickly learn how satisfied customers are at every point of their journey.
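As a rough illustration of survey analysis, the sketch below scores each response with a tiny sentiment lexicon and averages the scores per journey stage. The word lists, stage names and survey responses are invented for the example. Lexicon counting is a much simpler technique than the trained sentiment models NLP tools typically use.

```python
import re
from collections import defaultdict

# Hypothetical sentiment lexicon; production tools use trained models instead.
POSITIVE = {"great", "easy", "fast", "helpful", "love"}
NEGATIVE = {"slow", "confusing", "broken", "rude", "hate"}

def score(text: str) -> int:
    """Positive minus negative word count for one survey response."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)

def satisfaction_by_stage(responses):
    """Average sentiment score per customer-journey stage.

    `responses` is an iterable of (stage, text) pairs.
    """
    scores = defaultdict(list)
    for stage, text in responses:
        scores[stage].append(score(text))
    return {stage: sum(s) / len(s) for stage, s in scores.items()}

survey = [
    ("onboarding", "Setup was easy and fast"),
    ("onboarding", "The docs were confusing"),
    ("support", "Agent was helpful, great service"),
    ("checkout", "Payment page is slow and broken"),
]
print(satisfaction_by_stage(survey))
# {'onboarding': 0.5, 'support': 2.0, 'checkout': -2.0}
```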

Natural language processing also has a significant impact on marketing. When you use NLP to learn the language of your customer base, you gain a better grasp of market segmentation, can target your customers more directly, and may see a decrease in customer churn.

Some NLP systems are trained on specific datasets to carry out particular tasks; these are known as natural language processing models. This blog examines some of the best NLP models available, but first, let’s look at what pre-trained NLP models are.

What Are Pre-Trained NLP Models?

Pre-trained models (PTMs) for NLP are deep learning models that have been trained on a large dataset to perform specific NLP tasks. When trained on a large corpus, PTMs can learn universal language representations, which helps with downstream NLP tasks and avoids the need to train new models from scratch.

Pre-trained models are therefore reusable natural language processing models that developers can use to build NLP applications quickly. For example, the Hugging Face Transformers library provides a collection of pre-trained deep learning models for a range of NLP applications, such as text classification, question answering, and machine translation.

Many of these pre-trained models are free to use, and you don’t need deep NLP expertise to get started with them. The first generation of pre-trained models was trained to learn good word embeddings, such as word2vec and GloVe.
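Word embeddings rest on the idea that words appearing in similar contexts have similar meanings. The sketch below demonstrates that idea with count-based co-occurrence vectors and cosine similarity. This is a simpler technique than the learned embeddings of word2vec or GloVe, and the four-sentence corpus and function names are made up for illustration.

```python
import math
import re
from collections import Counter, defaultdict

def cooccurrence_vectors(sentences, window=2):
    """Map each word to a Counter of the words seen within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        tokens = re.findall(r"[a-z]+", sentence.lower())
        for i, word in enumerate(tokens):
            lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][tokens[j]] += 1
    return vectors

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

corpus = [
    "the cat drinks milk",
    "the dog drinks water",
    "the cat chases the dog",
    "stocks fell on the market",
]
vecs = cooccurrence_vectors(corpus)
print(round(cosine(vecs["cat"], vecs["dog"]), 3))     # 0.873
print(round(cosine(vecs["cat"], vecs["market"]), 3))  # 0.612
```

“cat” ends up closer to “dog” than to “market” because they share contexts such as “drinks” and “chases”. The frequent word “the” inflates both scores, which is one reason methods such as word2vec subsample very frequent words.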

NLP developers can load these models with minimal effort through libraries built on deep learning frameworks such as PyTorch and TensorFlow, and use them to carry out NLP tasks quickly. Pre-trained models are used more often than custom-built models because they are easier to deploy, often more accurate, and take less time to train, since they only need fine-tuning rather than training from scratch.

Leading Natural Language Processing Models

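BERT

BERT (Bidirectional Encoder Representations from Transformers) is a pre-trained model that analyses the words on both the left and the right of a word to infer its context. BERT ushered in a new era of NLP because it achieved high accuracy while being built on just two ideas.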

BERT works in two essential steps: pre-training and fine-tuning. In the pre-training stage, BERT is trained on unlabelled data using two unsupervised tasks:

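Masked language modelling (MLM) – To train a deep bidirectional model, some input tokens are masked at random and the model learns to predict them. Masking prevents the word being predicted from indirectly “seeing itself” through the bidirectional context.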

Next sentence prediction (NSP) – The model is given pairs of sentences, S1 and S2. For 50% of the training pairs, S2 is the sentence that actually follows S1 and is labelled IsNext; for the other 50%, S2 is a random sentence from the corpus and is labelled NotNext.
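The two pre-training tasks can be sketched in plain Python. This is a simplified illustration, not BERT’s reference implementation. Following the BERT paper, 15% of positions are chosen for prediction and, of those, 80% become [MASK], 10% become a random token and 10% are left unchanged. Here, though, each position is selected independently with probability 0.15, tokens are whole words rather than WordPiece sub-words, and the function names `mask_tokens` and `make_nsp_pairs` are hypothetical.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Masked language modelling input: returns (inputs, labels).

    labels[i] holds the original token at positions chosen for prediction
    and None elsewhere, so only the chosen positions contribute to the loss.
    """
    rng = rng or random.Random()
    inputs, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = MASK  # 80%: replace with [MASK]
        elif roll < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token
    return inputs, labels

def make_nsp_pairs(documents, rng=None):
    """Next sentence prediction examples: (sentence_a, sentence_b, label).

    `documents` is a list of at least two documents, each a list of sentences.
    Half the time sentence_b is the true next sentence (IsNext); otherwise it is
    drawn from a different document (NotNext), so it is never the true next one.
    """
    rng = rng or random.Random()
    examples = []
    for d, doc in enumerate(documents):
        for i in range(len(doc) - 1):
            if rng.random() < 0.5:
                examples.append((doc[i], doc[i + 1], "IsNext"))
            else:
                other = rng.choice([k for k in range(len(documents)) if k != d])
                examples.append((doc[i], rng.choice(documents[other]), "NotNext"))
    return examples

rng = random.Random(0)
tokens = "the cat sat on the mat".split()
print(mask_tokens(tokens, vocab=tokens, rng=rng))
docs = [["The cat sat.", "It purred."], ["Stocks fell.", "Investors sold."]]
print(make_nsp_pairs(docs, rng=rng))
```

Drawing NotNext sentences from a different document is a design choice that guarantees the negative example is never accidentally the real next sentence.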

The pre-trained model is then fine-tuned. At this stage, all of the model’s parameters are updated using labelled data from the “downstream tasks”. Each downstream task gets its own fine-tuned model with its own set of parameters, even though every one of them starts from the same pre-trained parameters.

BERT can be used for numerous tasks, such as named entity recognition and question answering, and it can be implemented in either TensorFlow or PyTorch.

GPT-3

GPT-3 (Generative Pre-trained Transformer 3) is a transformer-based NLP model that can unscramble words, compose poetry, answer questions, solve cloze tasks, and perform other tasks that require on-the-fly reasoning. Thanks to recent developments, GPT-3 is also used to write news articles and generate code.

GPT-3 models the statistical dependencies between words. It has 175 billion parameters, and its training data was filtered from roughly 45 TB of text collected from across the web. It is one of the most comprehensive pre-trained NLP models available.
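These dependencies are usually written as the standard autoregressive factorisation that GPT-style models learn. The probability of a token sequence $w_1, \dots, w_n$ is the product of each token’s probability given the tokens before it, and training maximises the log of this product:

$$
P(w_1, \dots, w_n) = \prod_{t=1}^{n} P(w_t \mid w_1, \dots, w_{t-1}),
\qquad
\log P(w_1, \dots, w_n) = \sum_{t=1}^{n} \log P(w_t \mid w_1, \dots, w_{t-1})
$$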

Unlike many other language models, GPT-3 does not need to be fine-tuned to perform downstream tasks. Thanks to its “text in, text out” API, developers can reprogram the model with plain-text instructions and a few examples.
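Because GPT-3 is steered by text alone, the “programming” happens in the prompt. The sketch below only assembles a few-shot prompt string. `build_few_shot_prompt` and the Input/Output layout are hypothetical conventions, and the call that would send the prompt to a model API is deliberately left out.

```python
def build_few_shot_prompt(instruction, examples, query):
    """Assemble a 'text in' prompt: instruction, worked examples, then the new input."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")  # blank line between examples
    lines.append(f"Input: {query}")
    lines.append("Output:")  # the model is expected to continue from here
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Unscramble the letters to form an English word.",
    [("tca", "cat"), ("odg", "dog")],
    "dirb",
)
print(prompt)
```

Changing the task means changing only the instruction and examples. The model’s weights stay the same, which is what the “text in, text out” description refers to.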

XLNet

XLNet was developed by a group of researchers from Carnegie Mellon University and Google. It handles common natural language processing tasks, including text classification and sentiment analysis.

XLNet is a generalised autoregressive pre-training method that combines the best qualities of Transformer-XL and BERT. It uses the autoregressive language modelling of Transformer-XL together with the bidirectional context that BERT obtains through autoencoding. Its main advantage is that it was designed to combine the strengths of both models without their drawbacks.

Like BERT, XLNet is built around bidirectional context analysis: to predict the token being analysed, it considers the words both before and after it. Unlike BERT, it does so by maximising the expected log-likelihood of a sequence over all possible permutations of the factorisation order, rather than by masking tokens.
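The XLNet paper writes this permutation language modelling objective as follows. Here $\mathcal{Z}_T$ is the set of all permutations of the positions $[1, 2, \dots, T]$ of a length-$T$ sequence $\mathbf{x}$, and $z_t$ and $\mathbf{z}_{<t}$ are the $t$-th and the first $t-1$ elements of a permutation $\mathbf{z}$:

$$
\max_{\theta} \; \mathbb{E}_{\mathbf{z} \sim \mathcal{Z}_T} \left[ \sum_{t=1}^{T} \log p_{\theta}\left(x_{z_t} \mid \mathbf{x}_{\mathbf{z}_{<t}}\right) \right]
$$

Only the factorisation order is permuted; the tokens keep their original positions. Across permutations, each token is therefore predicted from context on both sides.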

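XLNet overcomes some of BERT’s shortcomings. Because it is an autoregressive model, it does not rely on corrupting the input with [MASK] tokens. It therefore avoids the mismatch between pre-training and fine-tuning that this corrupted data causes in BERT. In experiments reported by its authors, XLNet outperformed both BERT and Transformer-XL.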

RoBERTa

RoBERTa is a natural language processing model built on top of BERT to improve its performance and address some of its shortcomings. It was developed through a collaboration between Facebook AI and the University of Washington.

The research team analysed BERT’s pre-training procedure and identified several modifications that could improve its performance. For example, the model could be trained on a larger, new dataset without the next sentence prediction objective.

The result of these modifications is RoBERTa, which stands for Robustly Optimized BERT Pretraining Approach. RoBERTa differs from BERT in the following ways:

- A much larger training dataset: 160 GB of text, compared with 16 GB for BERT.

- A longer training period, resulting from the larger dataset and up to 500K training steps.

- The next sentence prediction objective has been removed.

- Dynamic masking: the masking pattern applied to the training data changes throughout training rather than being fixed once during preprocessing.
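According to the RoBERTa paper, the original BERT implementation performed masking once during data preprocessing, producing a static mask. RoBERTa instead generates a new masking pattern every time a sequence is fed to the model. A minimal sketch of the difference follows. It assumes a simplified masker that only inserts [MASK] (the 80/10/10 replacement rule is omitted), and all function names are hypothetical.

```python
import random

MASK = "[MASK]"

def mask_once(tokens, mask_prob, rng):
    """Replace each token with [MASK] independently with probability mask_prob."""
    return [MASK if rng.random() < mask_prob else tok for tok in tokens]

def static_masking(tokens, epochs, mask_prob=0.15, seed=0):
    """BERT-style: mask once during preprocessing, then reuse that mask every epoch."""
    masked = mask_once(tokens, mask_prob, random.Random(seed))
    return [list(masked) for _ in range(epochs)]

def dynamic_masking(tokens, epochs, mask_prob=0.15, seed=0):
    """RoBERTa-style: draw a fresh mask each time the sequence is used."""
    rng = random.Random(seed)
    return [mask_once(tokens, mask_prob, rng) for _ in range(epochs)]

sentence = "robustly optimized pretraining changes the mask every epoch".split()
for epoch, (s, d) in enumerate(zip(static_masking(sentence, 3, 0.3),
                                   dynamic_masking(sentence, 3, 0.3))):
    print(f"epoch {epoch}: static={' '.join(s)} | dynamic={' '.join(d)}")
```

With static masking the model sees the same masked positions in every epoch. Dynamic masking exposes it to different positions of the same sentence over time.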

RoBERTa’s PyTorch implementation is available as open source on GitHub as part of Facebook AI’s fairseq toolkit.

Conclusion

The benefits of pre-trained language models are easy to see. These models are available to developers, enabling them to get accurate results while saving time and resources when building AI applications. But which NLP language model best suits your AI project? The answer depends on the size of the project, the type of dataset, the training methodology, and several other factors.