Everyone is talking about AI, but what does the future hold? In a series of interviews we examine unexpected perspectives on the space.

One of the biggest questions in martech is how artificial intelligence will affect it. Not in five or 10 years, but right now. To try and find out, we are doing a series of interviews with experts in the field. Our first is with Chris Penn, co-founder and chief data scientist at TrustInsights.ai.

Q: Because many, many marketers are still figuring out generative AI, what is an essential thing marketers need to understand about it?

A: These are language models. They’re good at language but, by default, they’re not good at things that are not language. They can’t count, for example, and they are terrible at math. But it turns out that language is the underpinning for pretty much everything. So even though a language model can’t do math, it can write code, because code is a language and code can run math.

What this means practically is that every software package and every software company out there that has either an internal scripting language or an API is or should be putting together a language model within their products.

Look at Adobe. It has its scripting language inside its products. And what has Adobe done? They’ve integrated generative AI prompting in Firefly and their software. So when you interact with it, it is essentially taking your prompt and rewriting it to its own scripting language and then executing commands. So it’s operationally better.


That’s what Microsoft has done with everything. If you look at how Bing works, Bing does not ask the GPT-4 model for knowledge. Bing asks it to translate your request into Bing queries, which then go into Bing, and when the results come back Bing has the model rewrite them.

And so the big transition that’s happening, and has to happen in the martech space now, is that if you’ve got an API, you should have a language model talking to that API and that output should be exposed to the customer.
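As a rough illustration of that pattern, here is a minimal Python sketch of a prompting layer sitting in front of an API. Everything named in it is an assumption made for the example: `get_campaign_stats` stands in for a product's existing API, `fake_language_model` is a stub in place of a real model call, and the campaign data is invented. It is a sketch of the idea, not how any vendor actually implements it.

```python
import json

# Hypothetical stand-in for a martech product's existing API.
def get_campaign_stats(campaign: str, metric: str) -> dict:
    fake_data = {("spring_sale", "open_rate"): 0.42}  # invented sample data
    return {
        "campaign": campaign,
        "metric": metric,
        "value": fake_data.get((campaign, metric)),
    }

# Allowlist of API calls the model is permitted to trigger.
ALLOWED_CALLS = {"get_campaign_stats": get_campaign_stats}

def fake_language_model(prompt: str) -> str:
    """Stub for a real model call. It returns the structured command a
    model would be asked to produce from the user's request."""
    return json.dumps({
        "call": "get_campaign_stats",
        "args": {"campaign": "spring_sale", "metric": "open_rate"},
    })

def handle_request(user_prompt: str) -> dict:
    # 1. The model translates natural language into a structured command.
    command = json.loads(fake_language_model(user_prompt))
    # 2. Only allowlisted calls are executed; anything else is rejected.
    func = ALLOWED_CALLS.get(command["call"])
    if func is None:
        raise ValueError(f"Unsupported call: {command['call']}")
    # 3. The product's own API does the actual work.
    return func(**command["args"])

result = handle_request("What was the open rate for the spring sale?")
print(result)
```

The model only translates the request; the numbers come from the API, which is why the allowlist matters more than the prompt wording.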

One of the first folks to do this was Dharmesh Shah over at HubSpot. I remember the day that GPT-3.5 Turbo came out, he was like, “We’re putting this out, I’m making a chatbot.” And he demoed the alpha and he was like, “Yeah, it sucks. It’s broken eight ways to Sunday. But we know this is where it’s going.”

So if you are a martech company and you are not putting a generative prompting layer inside your software, you’re done. Just sell the company now.

Q: OK, now that I know that, what should I know when using AI?

A: So there are two things that everybody needs to have to make the most of generative AI. No. 1 is data, because these tools work best with the data you give them. They’re not as good at generating as they are at comparing and analyzing and stuff like that.

Two is the quality and quantity of ideas that you have, and you have to have both. That is what is different. The skill doesn’t matter anymore, right? If you have an idea for a song, but you can’t play an instrument, fine, the machine will help you. It has the skill; you have to bring the idea. That applies to code, too.

I can say just from personal experience, I am coding things today at a pace that I would never have been able to manage even six months ago. I wrote a recommendation engine in an hour and a half in Python, a language that I don’t know how to code in, and it works really well.

Now, here’s the thing: I know what questions to ask because I have a domain understanding of how coding works. Even if I don’t know the syntax, I know what the data structure should look like, and I know to say, “Please don’t rerun this vector routine every single time. That’s stupid. Run it once, make a library, store it.”
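To make the "run it once, store it" instruction concrete, here is a minimal Python sketch of that kind of caching. The word-count "vectors", the function names and the file name are all assumptions chosen for illustration; the recommendation engine described in the interview is not shown here.

```python
import json
import os
import tempfile
from collections import Counter

def build_vectors(documents: dict) -> dict:
    """The 'expensive' step: turn each document into word counts."""
    return {name: dict(Counter(text.lower().split()))
            for name, text in documents.items()}

def load_or_build_vectors(documents: dict, cache_path: str) -> dict:
    # Reuse stored vectors if they exist instead of recomputing them.
    if os.path.exists(cache_path):
        with open(cache_path, encoding="utf-8") as f:
            return json.load(f)
    # Otherwise compute once and save the result for next time.
    vectors = build_vectors(documents)
    with open(cache_path, "w", encoding="utf-8") as f:
        json.dump(vectors, f)
    return vectors

docs = {
    "a": "email marketing tips",
    "b": "marketing automation tips and tricks",
}
cache_file = os.path.join(tempfile.mkdtemp(), "vectors.json")
first = load_or_build_vectors(docs, cache_file)   # computes and saves
second = load_or_build_vectors(docs, cache_file)  # loads from disk
print(first == second)
```

This simple version never refreshes the cache, so the stored file would need to be deleted or rebuilt whenever the underlying documents change.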

Q: Many of us don’t know that.

A: Yes. No one is teaching this. So this is one of the big issues right now. And it’s going to contribute substantially to the problems that generative AI causes.

Our society’s education systems are already not preparing students for any of modern reality. And it’s now made worse by the fact that many of the tasks that we associate with academic rigor are essentially moot, like writing term papers. A machine can write a better term paper than you can. Period. End of discussion.

And so critical thinking is one of those things that is just not taught. In fact — and this gets into tinfoil hat territory — the design of our education system is from the 1920s. Rockefeller, Carnegie and Mellon basically helped to design the modern education system to make smart factory workers. And we never have evolved past that. And we are now seeing the limitations of that exposed with generative AI. It turns out that the factory worker mindset is also what AI is really good at.

So companies need to be thinking about how do we train employees to think critically? How do we train employees to be rapid idea generators and then partner with AI tools to bring those ideas to life quickly?

The tools give us agility, they give us speed, but they don’t necessarily give us creative ideas because they’re always a mathematical average of whatever we put into them. So that’s where the big gaps are.

And the companies that are doing best with this right now are the ones that have a good bench of forward-thinking, gray-haired people. People who have hair that’s colored like ours, but are not afraid of the technology and are willing to embrace it. So we bring our experience to the table combined with the power of the tools to generate incredible results that a junior person doesn’t have the experience for, even if they have the technological savvy. That’s the gap.

Q: I have the right hair color and I’m definitely a technophile: Do I need to learn coding?

A: Even though it’s laborious, I actually think learning how to code is not a bad thing. There’s one book in particular that I recommend that people read. It’s called “Automate the Boring Stuff with Python.” It’s one of those books where you can read it, you don’t need to actually do the coding examples because again, ChatGPT can do that, but it will introduce you to control structures, loops, data storage, file transformation stuff.

Q: What new capabilities can we expect to see very soon?

A: There are a few things that are happening right now. One is that the current multimodal models really are hack jobs. They’re essentially a bad ensemble of things hooked together. You can see this if you use ChatGPT with the DALL-E image generator. You can talk to it, but it clearly does not understand what it’s looking at. There’s no return path for it to look at the image it created and go, “Oh yeah, there actually are five people in this image and you requested four.” So it’s really stupid.


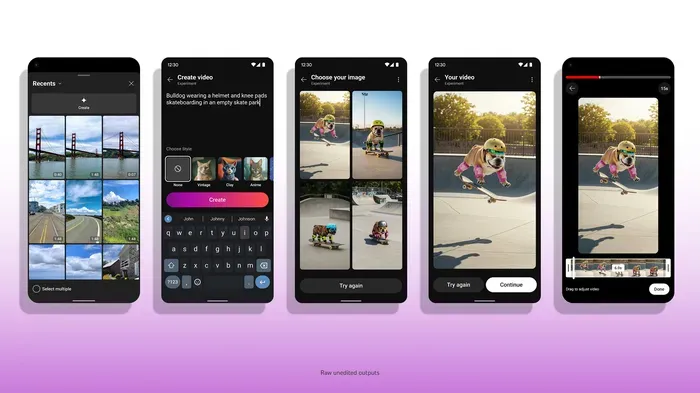

But you are starting to see action here. There is already a true multimodal model. It’s not very good, but it does work. And so, expect multimodality to be substantially improved over the next 12 months to the point where you will have round-trip modeling. You can put in a video, get text, then give some more text and have it modify the video and so on and so forth, all within the same model.

We are also seeing new model architectures. One is the mixture-of-experts (MoE) model, and the idea is that it is going to create better outputs. With MoE, they took out the feed-forward network inside of a language model and replaced it with a mixture of experts. So they’re essentially baking in a model’s ability to kind of spawn instances and talk to itself internally and then generate output based on the internal conversation.

Real simple example: You have a language model that’s designed to generate language, then you have another expert within the same architecture, the same meta-model if you will, that’s a proofreader that says, “Hey, that’s racist, try again.”
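As a rough sketch of the mechanics: in the commonly described form of mixture of experts, a small router scores the experts for each input and only the top-scoring few run, with their outputs blended by the router's weights. The toy Python below shows that routing step only. The three "experts" and the router weights are invented for illustration; in a real model each expert is a trained feed-forward network and the router is learned.

```python
import math

def softmax(scores):
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Toy "experts": each stands in for a separate feed-forward network.
def expert_double(x):
    return [2.0 * v for v in x]

def expert_negate(x):
    return [-v for v in x]

def expert_identity(x):
    return list(x)

EXPERTS = [expert_double, expert_negate, expert_identity]

# Hypothetical router weights, one row per expert (learned in a real model).
ROUTER_WEIGHTS = [
    [1.0, 0.0],
    [0.0, 1.0],
    [-1.0, -1.0],
]

def moe_layer(x, top_k=2):
    # The router scores every expert for this input vector.
    scores = [dot(row, x) for row in ROUTER_WEIGHTS]
    # Keep only the top_k experts; the rest do no work for this input.
    order = sorted(range(len(EXPERTS)), key=lambda i: scores[i], reverse=True)
    chosen = order[:top_k]
    # Turn the chosen experts' scores into mixing weights that sum to 1.
    weights = softmax([scores[i] for i in chosen])
    # The output is the weighted sum of the chosen experts' outputs.
    output = [0.0] * len(x)
    for w, i in zip(weights, chosen):
        for j, v in enumerate(EXPERTS[i](x)):
            output[j] += w * v
    return output, chosen

output, chosen = moe_layer([3.0, 1.0])
print(chosen, output)
```

Because only `top_k` experts run per input, the model can hold many experts while spending compute on just a few of them at a time.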

Another thing I saw in a paper published yesterday is they’ve gotten almost GPT-4 quality in an open-source package that you can run on 40 gigabytes of RAM. So a topped-out MacBook can run this thing that previously you would need a server room for.

The third thing is the agent network. So agent networks, things like AutoGen and lang gen: you’re starting to see real ecosystems developing around language models, where people are using the model as part of an ensemble of technologies to generate through apps.

One of the things that people are very short-sighted about right now is that they kind of assume a language model can do everything, and we’re like, no, it’s a language model, it does language. If you want it to read your database, that’s not what it does.

However, with things like retrieval-augmented generation and API connectors, you’re now seeing the language model become part of your computing architecture. Andrej Karpathy was showing this recently in a talk he gave about the language model OS, where you have a language model at the heart of an operating system for a computer.
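For readers unfamiliar with retrieval-augmented generation, the core idea is to fetch relevant text first and hand it to the model along with the question. The Python sketch below shows only that retrieval and prompt-assembly step, under loud assumptions: the documents are invented, the word-overlap similarity is a simple stand-in for the embedding search a production system would more likely use, and no model is actually called.

```python
import math
import re
from collections import Counter

def vectorize(text):
    # Lowercase word counts, ignoring punctuation.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    shared = set(a) & set(b)
    num = sum(a[w] * b[w] for w in shared)
    den = (math.sqrt(sum(v * v for v in a.values()))
           * math.sqrt(sum(v * v for v in b.values())))
    return num / den if den else 0.0

def retrieve(question, documents, k=1):
    # Rank documents by similarity to the question and keep the top k.
    q = vectorize(question)
    ranked = sorted(documents,
                    key=lambda d: cosine(q, vectorize(d)),
                    reverse=True)
    return ranked[:k]

def build_prompt(question, documents, k=1):
    # The retrieved text is placed in the prompt so the model answers
    # from supplied data rather than from memory.
    context = "\n".join(retrieve(question, documents, k))
    return (f"Answer using only this context:\n{context}\n\n"
            f"Question: {question}")

# Invented sample documents standing in for a company knowledge base.
DOCS = [
    "Our refund policy allows returns within 30 days of purchase.",
    "The spring sale email had an open rate of 42 percent.",
    "Support hours are 9 to 5 on weekdays.",
]

question = "What was the open rate of the spring sale email?"
prompt = build_prompt(question, DOCS)
print(prompt)
```

The resulting prompt is what would be sent to a language model; the model's job is then the language part, turning the retrieved facts into an answer.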

And I think that’s a place where we already see this happening now, and in the next 12 months you’re going to see much more advancement in those architectures and the portability of them.

There’s a tool called Llamafile, released by the Mozilla Foundation, that can take a language model and essentially wrap it up in an app structure. And now it’s just a deployable executable binary. So you could build a custom model, tune it, put it in an app and put it on a flash drive. You can hand it to someone and say, “Here, you can use this thing as is, no external compute required.” It really makes these things portable.

The thing I’ve got my eye on very sharply is open source: what’s happening with the open-weights models and the way developers are expanding the capabilities far beyond what the big tech companies are releasing now. OpenAI and Google and Meta and all those companies may have some of these same technologies and capabilities in their labs. But we’re seeing it happening on GitHub and on Hugging Face today. You can download it and mess with it. It’s pretty amazing.

Q: What risks should marketers keep in mind when it comes to AI?

A: The big thing is this: Under no circumstances should you let a generative AI model talk to the customer without supervision. But a lot of people are copying and pasting straight out of ChatGPT onto their corporate blog, which is always amusing. You cannot have these tools operate in solo mode, unsupervised.

Now there are asterisks and conditions where you can make that work. But, in general, that is true from a brand perspective. The bigger harm really is just using these tools thoughtlessly. I talked to one person not too long ago, who said “We just laid off 80% of our content marketing team because we could just have AI do it.”

I’m like, “Well, I will see you in one quarter when you have to rehire all those people at three times what you used to pay them as consultants because the machines actually can’t do what you think they can do.”

And there’s a lot of that very short-sightedness. So it’s much more of a strategic issue in terms of executives and stakeholders understanding what the tools can and can’t do and then right-sizing your capabilities based on that. People assume these things are like all-seeing magic oracles. Like, no, it’s a word prediction machine. That’s all that it does.

The post Chris Penn: Looking forward with AI appeared first on MarTech.
