I use AI tools like ChatGPT, Gemini and Copilot to explore career plans, obligations, ambitions and even moments of self-doubt. It’s not just about finding answers; it’s about gaining clarity by seeing my ideas reflected, reframed or expanded.

Millions rely on AI for guidance, trusting these systems to help navigate life’s complexities. Yet, every time we share, we also teach these systems. Our vulnerabilities — our doubts, hopes and worries — become part of a larger machine. AI isn’t just assisting us; it’s learning from us.

From grabbing attention to shaping intent

For years, the attention economy thrived on capturing and monetizing our focus. Social media platforms optimized their algorithms for engagement, often prioritizing sensationalism and outrage to keep us scrolling. But now, AI tools like ChatGPT represent the next phase. They’re not just grabbing our attention; they’re shaping our actions.

This evolution has been labeled the “intention economy,” where companies collect and commodify user intent: our goals, desires and motivations. As researchers Chaudhary and Penn argue in their Harvard Data Science Review article, “Beware the Intention Economy: Collection and Commodification of Intent via Large Language Models,” these systems don’t just respond to our queries. They actively shape our decisions, often favoring corporate profit over personal benefit.


Honey’s role in the intention economy

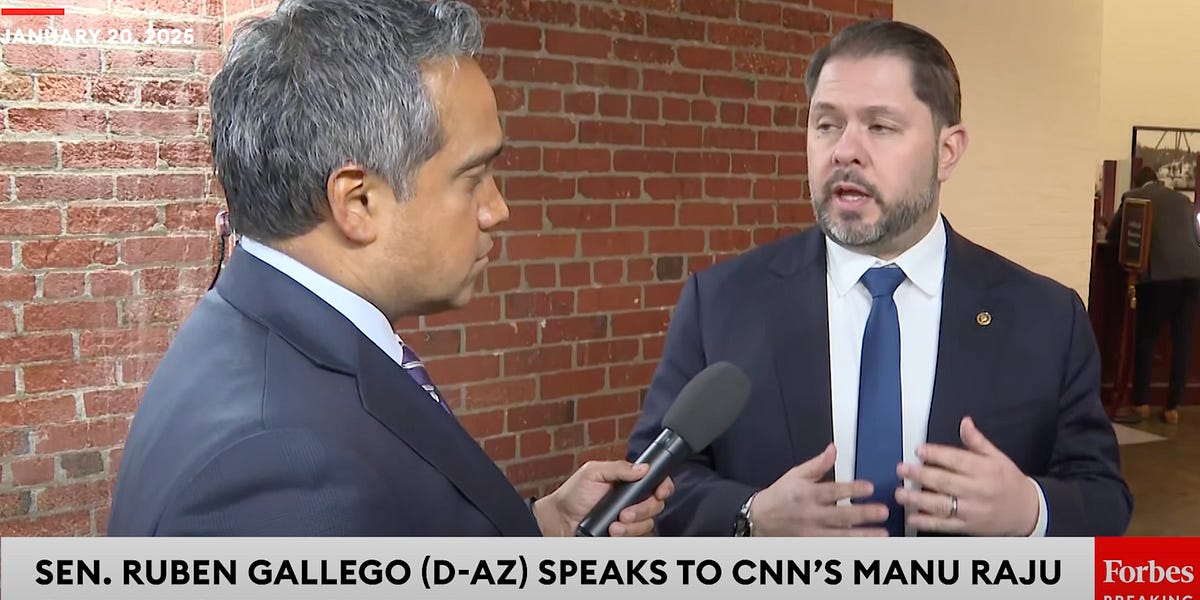

Honey, the browser extension acquired by PayPal for $4 billion, illustrates how trust can be quietly exploited. Marketed as a tool to save users money, Honey’s practices tell a different story. YouTuber MegaLag claimed, in his series “Exposing the Honey Influencer Scam,” that the platform redirected affiliate links from influencers to itself, diverting potential earnings while capturing clicks for profit.

According to MegaLag, Honey also gave retailers control over which coupons users saw, promoting less attractive discounts and steering consumers away from better deals. Influencers who endorsed Honey unknowingly encouraged their audiences to use a tool that siphoned away their own commissions. By positioning itself as a helpful tool, Honey built trust and then capitalized on it for financial gain.

“Honey wasn’t saving you money — it was robbing you while pretending to be your ally.”

– MegaLag

(Note: Some have said that MegaLag’s account contains errors; this is an ongoing story.)

Subtle influence in disguise

The dynamic we saw with Honey feels eerily familiar in AI tools. These systems present themselves as neutral and free of overt monetization strategies. ChatGPT, for example, doesn’t bombard users with ads or sales pitches. It feels like a tool designed solely to help you think, plan and solve problems. Once that trust is established, influencing decisions becomes far easier. That influence can take several forms:

- Framing outcomes: AI tools may suggest options or advice that nudge you toward specific actions or perspectives. By framing problems a certain way, they can shape how you approach solutions without you realizing it.

- Corporate alignment: If the companies behind these tools prioritize profits or specific agendas, they can tailor responses to align with those interests. For instance, asking an AI for financial advice might yield suggestions tied to corporate partners — like financial products, gig work or services. These recommendations may seem helpful but ultimately serve the platform’s bottom line more than your needs.

- Lack of transparency: Similar to how Honey prioritized retailer-preferred discounts without disclosing them, AI tools rarely explain how they weigh outcomes. Is the advice grounded in your best interests — or hidden agreements?


What are digital systems selling you? Ask these questions to find out

You don’t need to be a tech expert to protect yourself from hidden agendas. By asking the right questions, you can figure out whose interests a platform really serves. Here are five key questions to guide you.

1. Who benefits from this system?

Every platform serves someone — but who, exactly?

Start by asking yourself:

- Are users the primary focus or does the platform prioritize advertisers and partners?

- How does the platform present itself to brands? Look at its business-facing promotions. For example, does it boast about shaping user decisions or maximizing partner profits?

What to watch for:

- Platforms that promise consumers neutrality while selling advertisers influence.

- Honey, for instance, promised users savings but told retailers it could prioritize their offers over better deals.

2. What are the costs — seen and unseen?

Most digital systems aren’t truly “free.” If you’re not paying with money, you’re paying with something else: your data, your attention or even your trust.

Ask yourself:

- What do I have to give up to use this system? Privacy? Time? Emotional energy?

- Are there societal or ethical costs? For example, does the platform contribute to misinformation, amplify harmful behavior or exploit vulnerable groups?

What to watch for:

- Platforms that downplay data collection or minimize privacy risks. If it’s “free,” you’re the product.

3. How does the system influence behavior?

Every digital tool has an agenda — sometimes subtle, sometimes not. Algorithms, nudges and design choices shape how you interact with the platform and even how you think.

Ask yourself:

- How does this system frame decisions? Are options presented in ways that subtly steer you toward specific outcomes?

- Does it use tactics like urgency, personalization or gamification to guide your behavior?

What to watch for:

- Tools that present themselves as neutral but nudge you toward choices that benefit the platform or its partners.

- AI tools, for instance, might subtly recommend financial products or services tied to corporate agreements.


4. Who is accountable for misuse or harm?

When platforms cause harm — whether it’s a data breach, mental health impact or exploitation of users — accountability often becomes a murky subject.

Ask yourself:

- If something goes wrong, who will take responsibility?

- Does the platform acknowledge potential risks or does it deflect blame when harm occurs?

What to watch for:

- Companies that prioritize disclaimers over accountability.

- For instance, platforms that place all responsibility on users for “misuse” while failing to address systemic flaws.

5. How does this system promote transparency?

A trustworthy system doesn’t hide its workings — it invites scrutiny. Transparency isn’t just about explaining policies in fine print; it’s about letting users understand and question the system.

Ask yourself:

- How easy is it to understand what this platform does with my data, my behavior or my trust?

- Does the platform disclose its partnerships, algorithms or data practices?

What to watch for:

- Platforms that bury crucial information in legalese or avoid disclosing how decisions are made.

- True transparency looks like a “nutritional label” for users, outlining who benefits and how.


Learning from the past to shape the future

We’ve faced similar challenges before. In the early days of search engines, the line between paid and organic results was blurred until public demand for transparency forced change. But with AI and the intention economy, the stakes are far higher.

Organizations like the Marketing Accountability Council (MAC) are already working toward that kind of transparency. MAC evaluates platforms, advocates for regulation and educates users about digital manipulation. Imagine a world where every platform has a clear, honest “nutritional label” outlining its intentions and mechanics. That’s the future MAC is striving to create. (Disclosure: I founded MAC.)

Creating a fairer digital future isn’t just a corporate responsibility; it’s a collective one. The best solutions don’t come from boardrooms but from people who care. That’s why we need your voice to shape this movement.


Contributing authors are invited to create content for MarTech and are chosen for their expertise and contribution to the martech community. Our contributors work under the oversight of the editorial staff and contributions are checked for quality and relevance to our readers. The opinions they express are their own.
