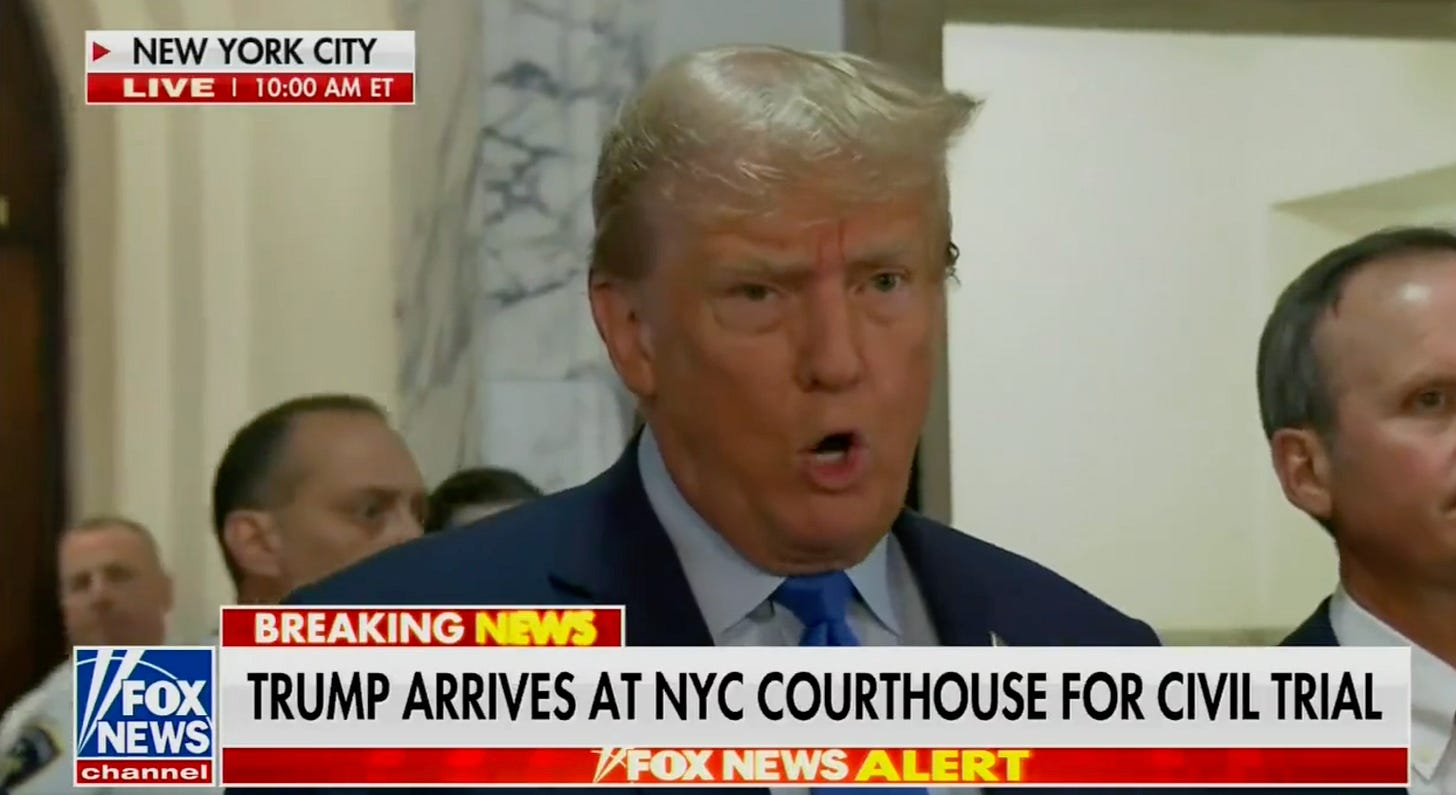

An example of a deepfake video of Facebook CEO Mark Zuckerberg alongside the original. (Elyse Samuels/The Washington Post via Getty Images)

Mark Zuckerberg’s virtual-reality universe, dubbed simply Meta, has been plagued by a number of problems, from technology issues to difficulty holding onto staff. That doesn’t mean it won’t soon be used by billions of people. Now Meta faces a new question: will the virtual environment, where users can design their own faces, be the same for everybody? Or will companies and politicians have greater flexibility to alter how they appear?

Rand Waltzman, a senior information scientist at the non-profit RAND Corporation, warned last week that the lessons Facebook has learned in personalizing news feeds and allowing hyper-targeted information could be used to supercharge its metaverse. There, even speakers could be personalized to appear more trustworthy to each audience member. Using deepfake technology, which creates realistic but falsified videos, a speaker could be modified to have 40% of each audience member’s features without that person even knowing.

Meta has already taken measures to address the problem. But other companies aren’t waiting. The New York Times and CBC Radio-Canada launched Project Origin two years ago to develop technology that proves a message actually came from its claimed source. Project Origin, Adobe, Intel and Sony are now part of the Coalition for Content Provenance and Authenticity. Some early versions of Project Origin software, including those that track the source of information online, are already available. Now the question is: who will use them?

“We can offer extended information to validate the source of information that they’re receiving,” says Bruce MacCormack, CBC Radio-Canada’s senior advisor of disinformation defense initiatives, and co-lead of Project Origin. “Facebook has to decide to consume it and use it for their system, and to figure out how it feeds into their algorithms and their systems, to which we don’t have any visibility.”

Project Origin, founded in 2020, builds software that lets viewers check whether information that claims to come from a trusted news source actually came from there, and that it has not been manipulated along the way. Instead of relying on blockchain or another distributed ledger technology to track the movement of information online, as might be possible in future versions of the so-called Web3, the technology tags information with data about where it came from, and that data moves with the information as it is copied and spread. An early version of the software has been made available to members and can be used now, MacCormack said.
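
To make the tagging idea concrete, here is a minimal sketch in Python. It is not Project Origin's or the coalition's actual format. The function names, the manifest fields and the shared demo key are all hypothetical, and a keyed hash (HMAC) stands in for whatever signature scheme a real provenance system would use. It only illustrates the general principle: a small record naming the source and fingerprinting the content travels with the content, and anyone holding the key can check that neither has been altered.

```python
import hashlib
import hmac
import json

# Hypothetical shared key for illustration only; a real system would
# rely on a publisher-held signing key rather than a shared secret.
PUBLISHER_KEY = b"demo-key-not-for-production"


def tag_content(content: bytes, source: str, key: bytes = PUBLISHER_KEY) -> dict:
    """Build a provenance tag that can be copied along with the content."""
    manifest = {
        "source": source,
        "sha256": hashlib.sha256(content).hexdigest(),  # fingerprint of the content
    }
    payload = json.dumps(manifest, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"manifest": manifest, "signature": signature}


def verify_content(content: bytes, tag: dict, key: bytes = PUBLISHER_KEY) -> bool:
    """Return True only if the tag is authentic and matches the content."""
    payload = json.dumps(tag["manifest"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, tag["signature"]):
        return False  # the tag itself was altered or forged
    return tag["manifest"]["sha256"] == hashlib.sha256(content).hexdigest()


if __name__ == "__main__":
    clip = b"original news clip bytes"
    tag = tag_content(clip, "example-newsroom")
    print(verify_content(clip, tag))               # True
    print(verify_content(clip + b" edited", tag))  # False
```

Because the tag depends only on the content and the key, no ledger is needed to look it up: the check works wherever the content and its tag end up.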

Meta’s misinformation problems go beyond fake news. To reduce overlap between Project Origin’s solutions and similar technology targeting other kinds of deception, and to ensure the solutions interoperate, the non-profit co-launched the Coalition for Content Provenance and Authenticity in February 2021 to prove the originality of several kinds of intellectual property. Adobe, which is on the Blockchain 50 list, runs the Content Authenticity Initiative. In October 2021 that initiative announced a project to prove that NFTs created with Adobe’s software were actually made by the artist.

“About a year and a half ago, we decided we really had the same approach, and we’re working in the same direction,” says MacCormack. “We wanted to make sure we ended up in one place. And we didn’t build two competing sets of technologies.”

Meta recognizes that deep fakes, and the distrust of information they create, are a problem. In September 2016 Facebook co-founded the Partnership on AI with Google and others. The group, which MacCormack and IBM advise, aims to improve the quality of the technology used to identify deep fakes. In June 2020 the social network released the results of its Deep Fake Detection Challenge, which showed that fake-detection software was only 65% successful.

Fixing the problem isn’t just a moral issue; it will affect the bottom lines of a growing number of companies. Research by McKinsey found that metaverse investments in the first half of 2022 were already double the previous year’s total, and forecast that the industry would be worth $5 trillion by 2030. A metaverse stuffed with fake information could easily turn that boom into a bust.

MacCormack says deep-fake software is improving faster than detection tools can be deployed, which is one reason the group decided to put more emphasis on proving that information came from its source. “If you put the detection tools in the wild, just by the nature of how artificial intelligence works, they are going to make the fakes better. And they were going to make things better really quickly, to the point where the lifecycle of a tool or the lifespan of a tool would be less than the time it would take to deploy the tool, which meant effectively, you could never get it into the marketplace.”

MacCormack expects the problem to get worse. Last week, an upstart competitor to Sam Altman’s Dall-E software, called Stable Diffusion, which lets users create realistic images just by describing them, opened up its source code for anyone to use. That, MacCormack says, means it’s only a matter of time before the safeguards OpenAI implemented to prevent certain types of content from being created are circumvented.

“This is sort of like nuclear non-proliferation,” says MacCormack. “Once it’s out there, it’s out there. So the fact that that code has been published without safeguards means that there’s an anticipation that the number of malicious use cases will start to accelerate dramatically in the forthcoming couple of months.”
