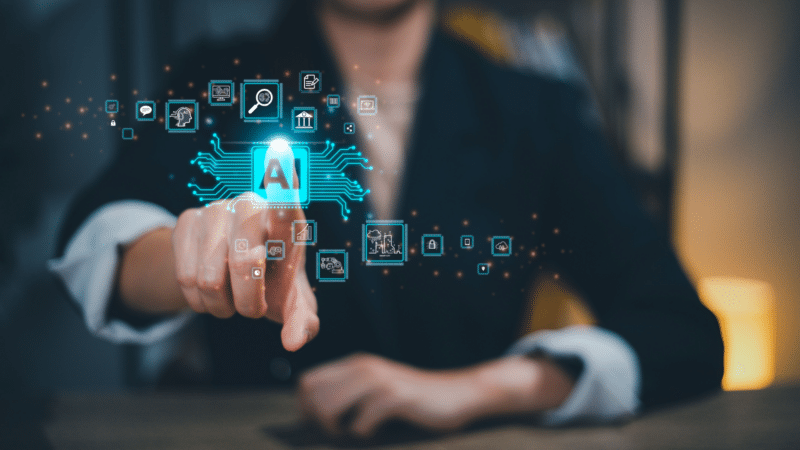

While the Messenger bot revolution never took hold the way Meta might have hoped, bots are still widely used in a range of contexts, with many brands now implementing responder bots in messaging apps to streamline their customer connection process.

And this could help further bot use. Today, Meta has released BlenderBot 3, an advanced conversational AI model that is able to engage with humans in a more natural way, while also using more prompts to guide users along a specific path of inquiry.

As explained by Meta:

“BlenderBot 3 is capable of searching the internet to chat about virtually any topic, and it’s designed to learn how to improve its skills and safety through natural conversations and feedback from people ‘in the wild.’ Most previous publicly available datasets are typically collected through research studies with annotators that can’t reflect the diversity of the real world.”

That is the real purpose of this release: by giving the public access to BlenderBot and letting people ask it questions in the app, Meta will gather more feedback on how to refine and improve the system, with a view to building a more realistic, organic simulation of conversation and engagement.

That could serve a range of purposes, and could make it much easier for brands to maintain their connection flow, with fully automated bots that respond to user queries 24/7 and direct people to the products and services that suit their needs.

The updated BlenderBot system combines two recently developed machine learning techniques, SeeKeR and Director, to build more advanced conversational models that learn from interactions and feedback.

“BlenderBot 3 delivers superior performance because it’s built from Meta AI’s publicly available OPT-175B language model — approximately 58 times the size of BlenderBot 2.”

The idea is that the new system will use this public engagement to iterate even faster, and become a more capable foundation for conversational AI systems moving forward.

There are, however, also risks in testing such a system in public.

Back in 2016, Microsoft released its conversational AI system ‘Tay’ for public testing, via a dedicated Twitter account that invited users to interact with the bot and help it learn conversational patterns. Within a day, Twitter users had the Tay account sharing an array of lewd and racist remarks, which forced Microsoft to shut it down.

Meta is well aware of this risk, and has built in various safeguards. These may cause some of BlenderBot’s responses to veer off-topic, but the bot is designed to avoid moving into risky territory wherever it can.

It could be a big advance for AI systems, and it may well be worth trying BlenderBot to see how well it actually handles engagement, and to consider whether a system like it might eventually be valuable in your own customer service process.

Those in the US can try it out here, where you can engage in a conversation with BlenderBot and provide feedback on the quality of the experience.